The potential of Artificial Intelligence (AI) and Machine Learning (ML) seems almost limitless in its ability to uncover and drive new sources of customer, product, service, operational, environmental, and societal value. If your company is to be competitive in the economy of the future, AI must be at the heart of your business operations.

A study by Kearney titled “The Impact of Analytics in 2020” highlights the untapped profitability and business impact available to companies looking for a rationale to accelerate their investments in data science (AI/ML) and data management:

- Explorers could increase profitability by 20% if they were as effective as Leaders

- Followers could increase profitability by 55% if they were as effective as Leaders

- Latecomers could increase profitability by 81% if they were as effective as Leaders

The business, operational, and social impact could be staggering, except for one major organizational challenge: data. None other than AI pioneer Andrew Ng has observed that data and data management are holding businesses and society back from realizing the potential of AI and ML:

“The model and the code for many applications are basically a solved problem. Now that the models have advanced to a certain point, we got to make the data work as well.” - Andrew Ng

Data is at the heart of training AI and ML models, and high-quality, trustworthy data orchestrated through efficient, scalable pipelines is what allows AI to deliver these compelling business and operational outcomes. Just as a healthy heart needs oxygen and reliable blood flow, AI/ML engines need a steady stream of cleansed, accurate, enriched, and trustworthy data.

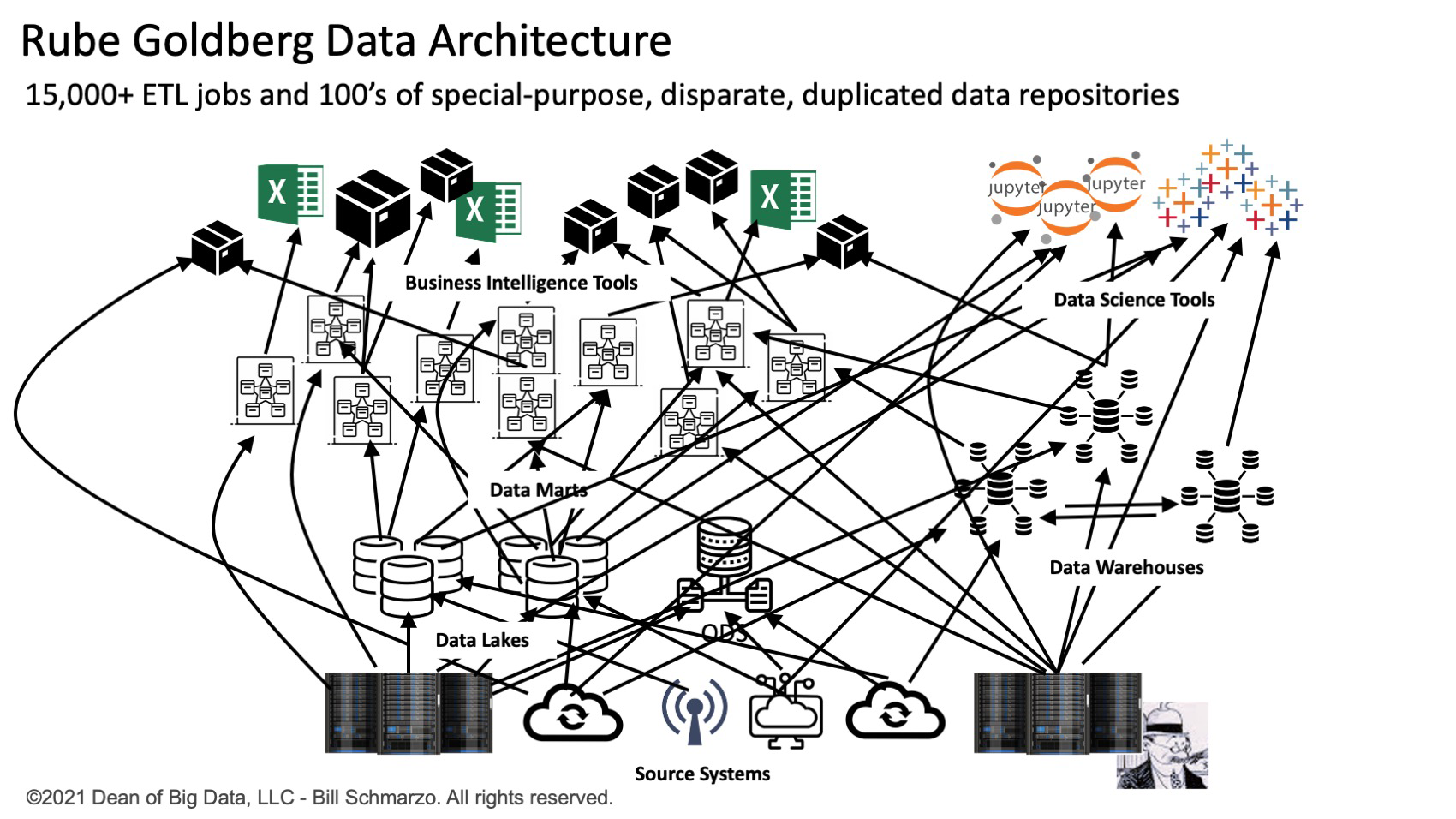

Consider, for example, a CIO whose team of 500 data engineers manages more than 15,000 extract, transform, and load (ETL) jobs. These jobs capture, move, aggregate, standardize, and reconcile data across hundreds of special-purpose data repositories (data marts, data warehouses, data lakes, and data lakehouses). The team performs this work against the company’s operational and customer-facing systems under ridiculously tight service level agreements (SLAs) to support a growing number of diverse data consumers. It is as if Rube Goldberg had become a data architect (Figure 1).

Figure 1: Rube Goldberg’s data architecture

Untangling this debilitating spaghetti architecture of one-off, special-purpose, static ETL programs for moving, cleansing, reconciling, and transforming data significantly reduces the “time to insight” that companies need to fully exploit the unique economic properties of data, which The Economist has called “the world’s most valuable resource.”

Creating intelligent data pipelines

The purpose of a data pipeline is to automate and scale common, repetitive data collection, transformation, movement, and integration tasks. A properly engineered data pipeline strategy can accelerate and automate the processing involved in collecting, cleansing, transforming, enriching, and moving data into downstream systems and applications. As the volume, variety, and velocity of data continue to grow, data pipelines that scale linearly across cloud and hybrid cloud environments become increasingly critical to a company’s operations.
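Linear scaling of this kind usually comes from data parallelism: split the records into independent partitions, process each partition separately, and combine the results. The following is a minimal sketch under stated assumptions: records are Python dicts with hypothetical `id` and `name` fields, the cleaning rule is illustrative, and a thread pool stands in for the processes or cluster nodes a real cloud deployment would use.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(records: list[dict], n: int) -> list[list[dict]]:
    # Round-robin split into n partitions that can be processed independently.
    return [records[i::n] for i in range(n)]

def clean_partition(rows: list[dict]) -> list[dict]:
    # Hypothetical per-partition work: drop rows without an id, normalize the name.
    return [
        {**row, "name": row["name"].strip().title()}
        for row in rows
        if row.get("id") is not None
    ]

def run_partitioned(records: list[dict], n_workers: int = 4) -> list[dict]:
    # Fan partitions out to a worker pool, then gather and flatten the results.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        results = list(pool.map(clean_partition, partition(records, n_workers)))
    return [row for part in results for row in part]

records = [{"id": i, "name": f"  customer {i} "} for i in range(10)]
records.append({"id": None, "name": "orphan"})
cleaned = run_partitioned(records)
```

Throughput grows roughly with the number of workers only when partitions are independent and evenly sized. Round-robin partitioning does not preserve record order, and CPU-bound Python work would need `ProcessPoolExecutor` or a distributed engine rather than threads.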

A data pipeline is a series of data processing activities that combine operational and business logic to perform advanced sourcing, transformation, and loading of data. A data pipeline can run on a schedule, in real time (streaming), or when triggered by a predetermined rule or set of conditions.
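To make this definition concrete, here is a minimal sketch of a pipeline as an ordered list of stages, where each stage’s output feeds the next, plus a rule-based trigger. The stage names, the record fields (`order_id`, `amount`), and the batch-size rule are hypothetical. In the scheduled or streaming modes, a scheduler or stream consumer would call `run_pipeline` instead of the rule check.

```python
from collections.abc import Callable
from functools import partial

Record = dict
Stage = Callable[[list[Record]], list[Record]]

def extract(rows: list[Record]) -> list[Record]:
    # Hypothetical source: a real pipeline would read from a database, file, or queue.
    return list(rows)

def validate(rows: list[Record]) -> list[Record]:
    # Operational logic: drop records missing a required field.
    return [r for r in rows if r.get("amount") is not None]

def transform(rows: list[Record]) -> list[Record]:
    # Business logic: express amounts in integer cents.
    return [{**r, "amount_cents": round(r["amount"] * 100)} for r in rows]

def load(rows: list[Record], target: list[Record]) -> list[Record]:
    # Hypothetical target: a list standing in for a warehouse table.
    target.extend(rows)
    return rows

def run_pipeline(rows: list[Record], stages: list[Stage]) -> list[Record]:
    # Each stage's output becomes the next stage's input.
    for stage in stages:
        rows = stage(rows)
    return rows

def should_trigger(pending: list[Record], min_batch: int = 3) -> bool:
    # Rule-based trigger: run once enough new records have accumulated.
    return len(pending) >= min_batch

warehouse: list[Record] = []
stages = [extract, validate, transform, partial(load, target=warehouse)]
pending = [
    {"order_id": 1, "amount": 19.99},
    {"order_id": 2, "amount": None},
    {"order_id": 3, "amount": 5.0},
]
if should_trigger(pending):
    run_pipeline(pending, stages)
```

Keeping each stage a plain function from records to records makes stages easy to test in isolation and to reorder or reuse across pipelines.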

In addition, logic and algorithms can be embedded in a data pipeline to create an “intelligent” data pipeline. Intelligent pipelines are reusable, extensible assets that can be specialized for particular source systems and can perform the data transformations needed to support the unique data and analytics requirements of the target system or application.
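One way to read “reusable and specializable” is as a shared pipeline template that each source system adapts with its own field mapping, while embedded logic runs against a common schema. This is a minimal sketch. The `Pipeline` class, its `for_source` and `then` methods, the field names, and the outlier limit are all hypothetical.

```python
from collections.abc import Callable, Iterable

Record = dict
Stage = Callable[[list[Record]], list[Record]]

def rename_fields(mapping: dict[str, str]) -> Stage:
    # Specialize for a source system by mapping its field names onto a common schema.
    def stage(rows: list[Record]) -> list[Record]:
        return [{mapping.get(k, k): v for k, v in r.items()} for r in rows]
    return stage

def flag_outliers(field: str, limit: float) -> Stage:
    # Embedded logic: tag records above a limit instead of silently dropping them.
    def stage(rows: list[Record]) -> list[Record]:
        return [{**r, "outlier": r[field] > limit} for r in rows]
    return stage

class Pipeline:
    def __init__(self, stages: Iterable[Stage] = ()):
        self.stages = list(stages)

    def for_source(self, mapping: dict[str, str]) -> "Pipeline":
        # New pipeline with a source-specific rename stage in front; the template is unchanged.
        return Pipeline([rename_fields(mapping)] + self.stages)

    def then(self, *stages: Stage) -> "Pipeline":
        # Extend with extra stages, again without mutating the shared template.
        return Pipeline(self.stages + list(stages))

    def run(self, rows: list[Record]) -> list[Record]:
        for stage in self.stages:
            rows = stage(rows)
        return rows

common = Pipeline([flag_outliers("amount", 1000.0)])
crm = common.for_source({"amt": "amount", "cust": "customer_id"})
erp = common.for_source({"total_value": "amount"})
```

For example, `crm.run([{"cust": 7, "amt": 1500.0}])` yields `[{"customer_id": 7, "amount": 1500.0, "outlier": True}]`. Returning new `Pipeline` objects rather than mutating the template keeps the shared asset safe to reuse across sources.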

As machine learning and AutoML become more common, data pipelines will become increasingly intelligent. Data pipelines can route data through advanced enrichment and transformation modules, where neural networks and other machine learning algorithms create more sophisticated transformations and enrichments, such as segmentation, regression analysis, clustering, and advanced indices and propensity scores.
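As an illustration of an ML-driven enrichment module, the sketch below segments customers by clustering a single numeric feature with k-means and writes the cluster label back onto each record. It is a dependency-free, one-dimensional example under stated assumptions: `annual_spend` is a hypothetical field, initialization is deterministic, and segments are numbered from the lowest centroid upward. A production pipeline would more likely call a library such as scikit-learn on many features.

```python
def kmeans_1d(values: list[float], k: int, iters: int = 20) -> list[float]:
    # Deterministic init: centroids at evenly spaced positions in the sorted data.
    s = sorted(values)
    if k == 1:
        return [sum(s) / len(s)]
    centroids = [s[(len(s) - 1) * i // (k - 1)] for i in range(k)]
    for _ in range(iters):
        clusters: list[list[float]] = [[] for _ in range(k)]
        for v in values:
            # Assignment step: each value joins its nearest centroid.
            nearest = min(range(k), key=lambda c: abs(v - centroids[c]))
            clusters[nearest].append(v)
        # Update step: move each centroid to its cluster mean (keep it if empty).
        new = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if new == centroids:
            break
        centroids = new
    return centroids

def enrich_with_segment(rows: list[dict], field: str, k: int = 3) -> list[dict]:
    # Add a "segment" label; 0 is the cluster with the smallest centroid.
    if not rows:
        return []
    centroids = sorted(kmeans_1d([r[field] for r in rows], k))
    return [
        {**r, "segment": min(range(k), key=lambda c: abs(r[field] - centroids[c]))}
        for r in rows
    ]

customers = [{"annual_spend": x} for x in [100, 120, 110, 1000, 1050, 5000, 5200]]
segmented = enrich_with_segment(customers, "annual_spend", k=3)
```

Here the three low spenders land in segment 0, the two mid-range ones in segment 1, and the two high spenders in segment 2. Downstream systems receive the enriched records without needing to know how the segments were computed.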

Finally, AI could be integrated into data pipelines so that they continuously learn and adapt to the source systems, the required data transformations and enrichments, and the evolving business and operational requirements of the target systems and applications.
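A simple, hedged illustration of a pipeline check that learns and adapts is an anomaly flag whose baseline is an exponentially weighted mean and variance, updated with every record it sees. This is a swapped-in statistical technique, not AI in the deep-learning sense. `AdaptiveRangeCheck`, its parameters (`alpha`, `z_threshold`, `warmup`), and the sample values are hypothetical.

```python
class AdaptiveRangeCheck:
    """Flags values far from a learned, exponentially weighted baseline."""

    def __init__(self, alpha: float = 0.1, z_threshold: float = 3.0, warmup: int = 5):
        self.alpha = alpha          # how quickly the baseline follows new data
        self.z = z_threshold        # how many standard deviations count as anomalous
        self.warmup = warmup        # observations to learn from before flagging anything
        self.n = 0
        self.mean = 0.0
        self.var = 0.0

    def update(self, x: float) -> bool:
        # Returns True if x looks anomalous relative to what has been learned so far.
        if self.n == 0:
            self.mean, self.n = x, 1
            return False
        std = self.var ** 0.5
        anomalous = self.n >= self.warmup and std > 0 and abs(x - self.mean) > self.z * std
        # Incremental exponentially weighted mean/variance update (learns from every value).
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        self.n += 1
        return anomalous

check = AdaptiveRangeCheck()
flags = [check.update(x) for x in [10, 11, 9, 10, 11, 10, 50]]
```

Because every value updates the baseline, a sustained shift in the source data gradually becomes the new normal rather than being flagged forever. Whether that behavior is desirable (drift) or dangerous (masking a real problem) is a design decision for each pipeline.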

For example, an intelligent healthcare data pipeline could analyze the grouping of Diagnosis Related Group (DRG) codes to check the consistency and completeness of DRG submissions and to detect fraud as DRG data moves through the pipeline from the source system to the analytical systems.
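A much-simplified sketch of such in-flight checks covers completeness, code format, and a duplicate-submission signal. Real DRG grouping and fraud detection involve far more, including grouper logic, coding guidelines, and statistical models. The field names and rules here are hypothetical, and the three-digit format assumes MS-DRG-style codes delivered as strings.

```python
import re

REQUIRED = ("claim_id", "patient_id", "admit_date", "drg")
DRG_FORMAT = re.compile(r"[0-9]{3}")  # assumption: DRGs arrive as three-digit strings

def check_drg_claims(claims):
    """Yield (claim, issues) pairs as claims stream through; issues is empty if clean."""
    seen = set()
    for claim in claims:
        issues = []
        # Completeness: every required field must be present and non-empty.
        missing = [f for f in REQUIRED if not claim.get(f)]
        if missing:
            issues.append("missing: " + ", ".join(missing))
        # Consistency: the DRG must match the expected code format.
        drg = claim.get("drg")
        if drg and not DRG_FORMAT.fullmatch(drg):
            issues.append(f"malformed DRG: {drg!r}")
        # Hypothetical fraud signal: same patient, admission date, and DRG seen before.
        if not missing:
            key = (claim["patient_id"], claim["admit_date"], drg)
            if key in seen:
                issues.append("possible duplicate submission")
            seen.add(key)
        yield claim, issues

claims = [
    {"claim_id": "A1", "patient_id": "P1", "admit_date": "2021-03-01", "drg": "470"},
    {"claim_id": "A2", "patient_id": "P1", "admit_date": "2021-03-01", "drg": "470"},
    {"claim_id": "A3", "patient_id": "P2", "admit_date": "2021-03-02", "drg": "47A"},
    {"claim_id": "A4", "patient_id": "P3", "drg": "291"},
]
results = [issues for _, issues in check_drg_claims(claims)]
```

Because the checker is a generator, it can sit between a streaming source and an analytical target and annotate claims one at a time rather than after a batch lands.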

Realizing business value

Chief data officers and chief data and analytics officers face the challenge of unlocking the business value of their data: applying data to the business to achieve quantifiable financial impact.

The ability to deliver high-quality, trustworthy data to the right data consumer at the right time, enabling more timely and accurate decisions, will be a key differentiator for today’s data-rich organizations. A Rube Goldberg system of ETL scripts and assorted special-purpose, analytics-centric repositories hinders a company’s ability to achieve this goal.

Learn more about intelligent data pipelines in the Dell Technologies eBook Modern Enterprise Data Pipelines.

This content was created by Dell Technologies. It was not written by the editorial staff of MIT Technology Review.